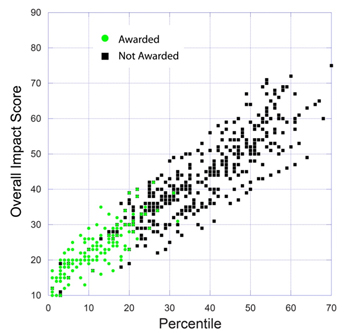

In response to a previous post, a reader requested a plot showing impact score versus percentile for applications for which funding decisions have been made. Below is a plot for 655 NIGMS R01 applications reviewed during the January 2010 Council round.

This plot confirms that the percentile representing the halfway point of the funding curve is slightly above the 20th percentile, as expected from previously posted data.

Notice that there is a small number of applications with percentile scores better than the 20th percentile for which awards have not been made. Most of these correspond to new (Type 1, not competing renewal) applications that are subject to the NIGMS Council’s funding decision guidelines for well-funded laboratories.

This is fascinating, and you and NIGMS are to be commended for this transparency! Please challenge your colleagues at the other ICs to meet this laudable standard!!

Just out of curiosity, why would NIGMS accept assignment of a Type 1 application that is never going to be funded by virtue of the “well-funded” policy?

Also, although the resolution of the image is poor, it looks like some of the dots have a slightly different shape/size. Are those depicting multiple apps with the same combination of impact score and percentile?

With regard to your first question: When we accept a new application from a well-funded investigator, we do not know whether we will fund it or not. If it does well in peer review, then the program director does an analysis of the science compared to the other funded research of the investigator, and this is presented to our advisory council. A substantial number of such applications are, in fact, funded. Furthermore, the funding threshold is applied at the time of funding, and this depends on the just-in-time information. Funding levels can move up and down substantially between application submission and council review.

With regard to your second comment: There are only green circles and black squares. If more than one application falls at the same percentile and impact score, these symbols are completely overlapping.

Fantastic info!

I wish the other institutes, especially for me the NCI, were more forthcoming.

Fascinating! The applications with the best percentile score have the lowest overall impact. This clearly indicates that the review process needs to be fixed. By the way, the recent changes are only cosmetic in nature and fail to address the problem.

Oscar, this is exactly as it should be. The lowest overall impact score on the 1-9 scale is intended to reflect the greatest overall impact.

Oscar, I think you are misunderstanding the overall impact score. A low number means a very good score, i.e., a low ‘impact score’ indicates a high impact project.

It looks to me as if the grey zone pickups may reflect an attempt to correct for study section variability in score/percentile relationships. Does this come into play in your decision making? Or are you just working from Programmatic priorities at that point? Also, now that you’ve cracked the door :-), how about color coding the New Investigator and ESI apps?

This makes no sense. Correcting for study section variability in “impact score inflation” is exactly the purpose of percentiling in the first place.

Well, you look at the 20-30 percentile by 20-40 overall impact rectangle and tell me what you are seeing, then, PP.

I’m seeing mostly unfunded grants, with a smattering of funded grants. The distribution of funded grants seems skewed towards the lower percentiles, and randomly distributed with respect to impact score. This is exactly what one expects if impact score is ignored in making funding decisions, and only percentile is considered (along with, of course, various non-numerical considerations).

Thanks for sharing the excellent information!

With regard to the “well-funded” policy, you mentioned that “A substantial number of such applications are, in fact, funded”. It is then not very clear to me why some well-funded PIs still got funded and some were not that lucky (especially the two with percentile <5%). What is the major factor in "just-in-time" that makes the difference?

Thanks!

The policy states:

Support of Research in Well-Funded Laboratories

Well-funded laboratories are defined as those with over $750,000 in direct costs for research support, including the pending application.

Renewal (formerly, competing continuation) grant applications (Type 2s)

The Council expects the Institute to employ its usual standards and consideration for funding as it handles renewal applications from well-funded laboratories. The expectation is that Type 2 applications from well-funded laboratories will receive normal consideration for funding. Specific exceptions to this general policy will be discussed with the Council, and Institute staff will be guided by the sense of Council in making funding decisions.

New grant applications (Type 1s)

The Council expects the Institute to support new projects in well-funded laboratories only if they are highly promising and distinct from other funded work in the laboratory. The Institute’s default position is not to pay such applications. However, under special circumstances and with strong justification, staff may recommend overriding the default position. In order for the application to be funded, the Council must concur with the recommendation.

Budget considerations

The Council expects the Institute to implement, where appropriate, reasoned budget reductions greater than those dictated by the cost-management principles for competing awards made to well-funded laboratories.

The key point is that “The Council expects the Institute to support new projects in well-funded laboratories only if they are highly promising and distinct from other funded work in the laboratory.” Thus, program staff and Council members analyze how distinct the new application is from other projects in the well-funded investigator’s laboratory and may not recommend funding if the new application deals with research that is closely related to ongoing work supported by other grants.
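As a rough illustration only, the default positions described in the policy text above can be sketched in a few lines of Python. This is not how NIGMS staff or Council actually make decisions, which rest on scientific judgment rather than a formula. The class, field names, and returned strings are all hypothetical; the only values taken from the policy are the $750,000 direct-cost threshold (which includes the pending application) and the distinction between Type 1 and Type 2 applications.

```python
from dataclasses import dataclass

# Threshold from the policy text: "over $750,000 in direct costs for
# research support, including the pending application."
WELL_FUNDED_THRESHOLD = 750_000


@dataclass
class Application:
    """Hypothetical record; field names are assumptions for illustration."""
    app_type: int                    # 1 = new application, 2 = renewal
    requested_direct_costs: float    # direct costs of the pending application
    other_direct_costs: float        # the laboratory's other research support
    staff_recommends_override: bool = False
    council_concurs: bool = False


def is_well_funded(app: Application) -> bool:
    # The pending application counts toward the total, and the total must
    # be strictly over the threshold.
    total = app.requested_direct_costs + app.other_direct_costs
    return total > WELL_FUNDED_THRESHOLD


def default_position(app: Application) -> str:
    # Renewals, and anything from a laboratory that is not well funded,
    # receive normal consideration.
    if not is_well_funded(app) or app.app_type == 2:
        return "normal consideration"
    # New applications from well-funded laboratories are not paid by
    # default, unless staff recommend an override and Council concurs.
    if app.staff_recommends_override and app.council_concurs:
        return "eligible for funding (default overridden)"
    return "not paid by default"
```

For example, `default_position(Application(1, 250_000, 600_000))` gives `"not paid by default"`, while the same budget figures on a Type 2 application give `"normal consideration"`. The sketch leaves out the part that matters most in practice: the analysis of whether the new project is highly promising and distinct from the laboratory's other funded work, which is what drives the override recommendation.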

The Impact Scores for the funded apps look skewed to the high side to me, which is the reason I commented.

On reflection, I think PhysioProf has the right of it.

Just out of curiosity: where within this graph do new and early stage investigators show up? Would those be the ones with higher scores/percentiles still being funded? And if not, how do some grants with high percentile and impact scores still get funded?