A frequent topic of discussion at our Advisory Council meetings—and across NIH—is how to measure scientific output in ways that effectively capture scientific impact. We have been working on such issues with staff of the Division of Information Services in the NIH Office of Extramural Research. As a result of their efforts, as well as those of several individual institutes, we now have tools that link publications to the grants that funded them.

Using these tools, we have compiled three types of data on the pool of investigators who held at least one NIGMS grant in Fiscal Year 2006. We determined each investigator’s total NIH R01 or P01 funding for that year. We also calculated the total number of publications linked to these grants from 2007 to mid-2010 and the average impact factor for the journals in which these papers appeared. We used impact factors in place of citations because the time dependence of citations makes them significantly more complicated to use.

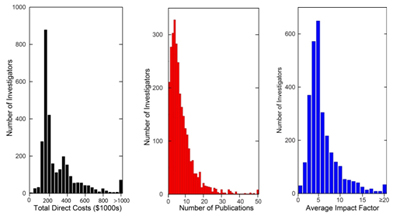

I presented some of the results of our analysis of this data at last week’s Advisory Council meeting. Here are the distributions for the three parameters for the 2,938 investigators in the sample set:

For this population, the median annual total direct cost was $220,000, the median number of grant-linked publications was six and the median journal average impact factor was 5.5.

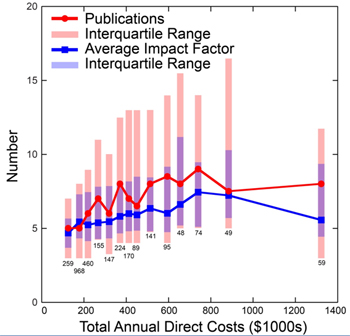

A plot of the median number of grant-linked publications and median journal average impact factors versus grant total annual direct costs is shown below.

This plot reveals several important points. The ranges in the number of publications and average impact factors within each total annual direct cost bin are quite large. This partly reflects variations in investigator productivity as measured by these parameters, but it also reflects variations in publication patterns among fields and other factors.

Nonetheless, clear trends are evident in the averages for the binned groups, with both parameters increasing with total annual direct costs until they peak at around $700,000. These observations provide support for our previously developed policy on the support of research in well-funded laboratories. This policy helps us use Institute resources as optimally as possible in supporting the overall biomedical research enterprise.

This is a preliminary analysis, and the results should be viewed with some skepticism given the metrics used, the challenges of capturing publications associated with particular grants, the lack of inclusion of funding from non-NIH sources and other considerations. Even with these caveats, the analysis does provide some insight into the NIGMS grant portfolio and indicates some of the questions that can be addressed with the new tools that NIH is developing.

Thank you for doing the difficult work of collecting this information on grant funding per lab versus productivity. The data appear to show what many scientists have argued for a long time: that once you exceed a certain amount of funding per lab, the productivity per dollar actually decreases. Thus, allowing an unlimited amount of funding per year to individual labs would appear to be counterproductive. I hope NIH will act on this important new information.

The limitations of Impact Factor methodologies are well-known, and are particularly prevalent in chemistry – important articles may be cited for decades after publication and some of the top tier journals, such as J. Am. Chem. Soc., do not publish reviews that would otherwise inflate their scores. One hopes that these numbers will not be taken too seriously, especially when evaluating the productivity of chemistry investigators, for which NIGMS is the major funding source within the NIH.

Dear Dr. Berg,

This is indeed an interesting, if counterintuitive, insight. Have you considered separating investigator-initiated R01s from R01s generated in response to RFAs? Or using citations per paper in parallel with journal impact factor to assess impact?

I realize that citations may not be available yet for work published in 2010, but impact factor favors the trendy over the impactful and rewards selective journals (with a strong editorial bias toward what is “in”) over specialized journals. Citations of papers published in 2007-2009 reflect user appreciation.

Also, I have been struggling in my own mind how to translate the value of investment in basic research to a lay audience, especially in today’s political climate. Having failed to come up with a brilliant metric of my own, I thought it would be a good idea to ask NIGMS funded PIs for their views. One of our smart colleagues could come up with the perfect evaluation tool.

This is a fascinating analysis, and Dr. Berg is to be commended for sharing the data. My personal take is that $300-600K in annual funding seems to be the most productive range. I wonder how the average individual R01 compares to PPGs, U19s and CTSAs in productivity per dollar invested.

The very low slopes for publications/dollar and IF/dollar show the lack of equivalent value for anything but the smallest grants, with these imperfect metrics. Perhaps what’s occurring is that people found 1.5 papers/yr was the optimum for renewal. Have you considered splitting by Type 1 vs 2 awards?

These data, such as they are, do not support the contention that bigger labs are more productive (bang for the buck). Quite the contrary, you get 5 pubs with 200K a year and nowhere near 20 pubs with 4 times the support. The numbers are even worse as the funding levels increase over 800K.
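The comparison in this comment can be spelled out with a little arithmetic. The sketch below is illustrative only: it uses the round figures quoted in the comment (5 publications at $200K a year), not values read from the NIGMS data, and the function name `expected_pubs_if_linear` is hypothetical.

```python
def expected_pubs_if_linear(base_pubs: float, base_cost: float, cost: float) -> float:
    """Publications expected at `cost` if output scaled in proportion to funding."""
    return base_pubs * cost / base_cost


# Round figures quoted in the comment, not values taken from the NIGMS data set.
expected = expected_pubs_if_linear(base_pubs=5, base_cost=200_000, cost=800_000)
print(expected)  # 20.0
```

Any observed publication count below this proportional benchmark means fewer publications per dollar at the higher funding level, which is the commenter's point.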

How about productivity (by whatever measure you like) plotted vs grant priority score? NIGMS funds to ~20th percentile… is there any productivity difference within the top 20%?

Regarding the following:

“Nonetheless, clear trends are evident in the averages for the binned groups, with both parameters increasing with total annual direct costs until they peak at around $700,000. These observations provide support for our previously developed policy on the support of research in well-funded laboratories.”

Many of the well-funded laboratories are well-funded because they are working in consortium-based community resource projects. Often, the huge amount of work done for these projects is represented in one or a few very highly cited papers, with many authors. These contributions would be under-represented in your graph. Probably more importantly, the value of these projects would most often be represented in the publications of others that use the data or resource, which is often not cited at all or cited unconventionally, and also would not be represented in your plots. In general, the policy for well-funded laboratories should be very careful not to penalize labs for participating in large community resource projects.

Just thought I’d say that for the record.

The NIGMS well-funded laboratory policy provides a process for considering additional funding to well-funded laboratories. The default is that a new application from a well-funded investigator will not be funded, but many exceptions are made based on analysis by our program staff and our advisory council. Applications from investigators who lead community resource projects are a common exception. As you note, the publication metrics used in this analysis may also underestimate the true impact of such projects.

Again, many thanks for sharing your analyses, Dr. Berg. This is important information.

Would you be willing to re-plot the data, with the ordinate axis normalised to publications or impact factor per dollar funded?
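One way the requested normalisation might be computed is sketched below. This is a minimal illustration under stated assumptions, not the method used for the plots: the helper `per_100k` is hypothetical, and the only inputs are the population medians quoted in the post ($220,000 and six grant-linked publications).

```python
def per_100k(value: float, annual_direct_cost: float) -> float:
    """Return `value` per $100,000 of annual direct costs."""
    if annual_direct_cost <= 0:
        raise ValueError("annual_direct_cost must be positive")
    return value / (annual_direct_cost / 100_000)


# Population medians quoted in the post: $220,000 and six publications.
print(round(per_100k(6, 220_000), 2))  # 2.73
```

Note that a ratio of medians is not the same as the median of per-investigator ratios, so a proper re-plot would need to apply this calculation to each investigator (or each cost bin) before summarising.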

It requires more money per paper to generate a GlamourMag level publication, of course. So some of that flattening may reflect a shift in lab goals from actually doing science to pursuing GlamourMag pubs for their own sake.

Question for Director Berg: Is NIGMS interested in the relative fraction of data generated in the laboratory that ends up published versus not published simply because it is not cool enough for a high-IF journal submission? Personally, I would think this should be an area of concern for the NIH: the degree to which current competitive practices within science end up giving you *less* bang for the buck.

As Richard Clark notes above, do holders of 3 R01s produce 3x the publications of investigators with only one? And do they inflate the numbers by citing multiple grants on single publications?

Can the publication analysis be tested/corrected for these situations?

This is indeed a very important point. Double-dipping on publications in progress reports from well-funded labs is a common and, unfortunately, overlooked practice. Officially acknowledged support from multiple grants of the same PI in a publication is in fact irrefutable evidence of scientific overlap, which should raise a red flag for potential misconduct: making a false statement of no overlap to a government funding agency. To Jeremy: Now that NIH-funded publications are deposited in PMC, why not track double-dipping and initiate inquiries? Also, maybe study section scientific reviewers should be instructed to identify double-dipping in their critiques.

On another note, why not just do away with NIH progress reports and replace them with some sort of analysis like the one in your post? It would save time for grantees, reviewers, and program officers alike. It would also provide a better metric of grant “deliverables” than a rambling narrative about what was found and not found.

As noted by several already, the basic point of the charts will shock. But it is good to see a serious attempt to objectify it.

Let’s not forget some research simply costs more. Primate-based work might be the best example of such basic science? But such work is no more productive, so it might look like “poor value”?

Nature interviewed Dr. Berg and others about this analysis. Read the article at

Let’s face it: scientists rarely have management backgrounds, and some have a hard time with personal hygiene. It sometimes boggles my mind to see someone handle millions of dollars of taxpayer money in such inefficient ways. I have seen the numbers above hold true almost every time a grant involves a multi-group, multi-lab collaboration; it always falls apart…

A related concern is the serious lack of mentoring received by post-docs and grad students in large labs. Commonly the papers in large labs are ghostwritten by grad students and post-docs with very little input from the PIs. I wish the NIH would have addressed this lack of mentoring and career development instead of focusing on the Early Independence Award Program.

um… dunno what tradition you are coming from but in NIH funded biomedical research the grad students and postdocs are *supposed* to be doing the writing. this is not “ghostwriting”!

I’ve crunched the numbers in my own laboratory – it typically takes 4-5 years from the beginning of a project to it being accepted for publication. I love the idea of productivity being based on quantitative measurements of papers and citations but the lag time is so long that submitting a list of papers as a progress report would not be helpful for a 4 year grant… unless the funding model changed to fund a given researcher for 5 years based on the prior 5 years’ productivity.

Really great research. Is there any chance I can have the RAW data?

Thanks,

Travis

It seems at first glance that productivity per grant dollar is dramatically lower in labs that are highly funded: grants at $800K produce not quite twice as many papers as grants at $150K. However, as one commenter noted, it is difficult to separate funds for animals or equipment from funds for personnel. It would be interesting to separate those expenses, and to plot the graph as a function of personnel costs. Given the differences in publication practices in the many scientific cultures receiving NIH funds, would it be possible to separate say medicinal chemistry from structural biology, from neuroscience, from genomics, etc?
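The per-dollar comparison in the first sentence above can be made concrete. The sketch below is only an illustration using the commenter's round figures; it treats "not quite twice as many papers" as an upper bound of 2.0, and the function name `relative_output_per_dollar` is hypothetical.

```python
def relative_output_per_dollar(output_ratio: float, small_cost: float, large_cost: float) -> float:
    """Per-dollar output of the larger grant relative to the smaller one."""
    return output_ratio / (large_cost / small_cost)


# "Not quite twice as many papers" is treated here as an upper bound of 2.0.
bound = relative_output_per_dollar(output_ratio=2.0, small_cost=150_000, large_cost=800_000)
print(round(bound, 3))  # 0.375
```

On these figures, the larger grants would yield at most about three-eighths as many papers per dollar as the smaller ones, before any adjustment for animal, equipment, or personnel costs.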

As another commenter pointed out, lasting scientific impact is very different from high-visibility journal publications. The only other study I’ve read (about physics departments) suggested funding level was uncorrelated with lasting scientific merit.

These admittedly very imperfect measures are at least a starting point for reconsideration of current grant practices. I hope that others will follow up this effort.

Having more money may allow labs to take bigger risks on their design of experiments. These high risk experiments may yield fewer papers per $ but they may yield more highly important papers.

These data come as a surprise to me. I had always believed that there was an inverse correlation between funding dollars and scientific productivity: scientists with fewer dollars focus their energy on doing science, while those with more dollars spend all their time writing grants and doing no science. Does that make sense? Impact factor is also an oxymoron and a very poor measure of scientific productivity. Some of the best science done appears in more specialized journals with low impact factors, while high impact factor journals frequently publish wishy-washy science that is in vogue rather than of true fundamental value.

I posted an extension of this analysis with points corresponding to individual investigators rather than the binned values shown here.

Although Dr. Berg’s study provides an interesting data point on scientific productivity, I would like to question the assumption that counting scientific publications is an accurate way of measuring scientific output and impact. Are all scientific publications of equal significance? Clearly: No. Consider the least publishable unit, and consider the possibility that labs with higher funding are producing longer, denser, more informative, more significant, and higher impact publications. For example, consider two groups performing clinical trials research, one of which has three times the budget of the other. They might both publish a single paper at the end, but the group with more funding might have performed a longer study of larger populations, and attained higher statistical significance in their results, across a more diverse patient population. Counting publications does not provide an accurate measure of scientific output or scientific impact.

Looks like you would get 3X as much research productivity by funding four labs @ $200K each rather than funding one lab @ $800K, with only small differences in journal impact factor.

It might be interesting to check [my] paper on “impact per dollar” [in Current Science from the Indian Academy of Sciences].

It’s striking that most publications are around the 5 IF value.

So, I wonder what the implications of this are for investigators and NIH.

It would mean that most productivity/$ didn’t reach much of the audience. But it depends on what the priority audience is.