Last week, President Obama announced the 2010 recipients of the National Medal of Science and the National Medal of Technology and Innovation. The 10 winners of the National Medal of Science include long-time NIGMS grantees Steve Benkovic from Pennsylvania State University and Susan Lindquist from the Whitehead Institute, MIT. As always, I am pleased when our grantees are among the outstanding scientists and innovators recognized by the President in this significant way.

Nobel News

The Royal Swedish Academy of Sciences announced today that long-time NIGMS grantee Ei-ichi Negishi from Purdue University will share the Nobel Prize in chemistry with Richard Heck from the University of Delaware and Akira Suzuki from Hokkaido University in Japan for “palladium-catalyzed cross couplings in organic synthesis.” All of us at NIGMS congratulate them on this outstanding recognition of their accomplishments.

Carbon-carbon bond-forming reactions are the cornerstone of organic synthesis, and the reactions developed by these Nobelists are widely used to produce a range of substances, from medicines and other biologically active compounds to plastics and electronic components. NIGMS supports a substantial portfolio of grants directed toward the development of new synthetic methods precisely because of the large impact these methods can have.

I have personal experience with similar methods. I am a synthetic inorganic chemist by training, and a key step during my Ph.D. training was getting a carbon-carbon bond-forming reaction to work (using a reaction not directly related to today’s Nobel Prize announcement). I spent many months trying various reaction schemes, and my eventual success was really the “transition state” for my Ph.D. thesis: Within a month of getting this reaction to work, it was clear that I would be Dr. Berg sooner rather than later!

I’d also like to note that this year’s Nobel Prize in physiology or medicine to Robert Edwards “for the development of in vitro fertilization” also appears to have an NIGMS connection. Roger Donahue sent me a paper he coauthored with Edwards, Theodore Baramki and Howard Jones titled “Preliminary attempts to fertilize human oocytes matured in vitro.” This paper stemmed from a short fellowship that Edwards did at Johns Hopkins in 1964. Referencing the paper in an account of the development of IVF, Jones notes that, “No fertilization was claimed but, in retrospect looking at some of the photographs published in that journal (referring to the paper above), it is indeed likely that human fertilization was achieved at Johns Hopkins Hospital in the summer of 1964.” The paper cites NIGMS support for this work through grants to Victor McKusick.

In all, NIGMS has supported the prizewinning work of 74 grantees, 36 of whom are Nobel laureates in chemistry.

NIH-Wide Correlations Between Overall Impact Scores and Criterion Scores

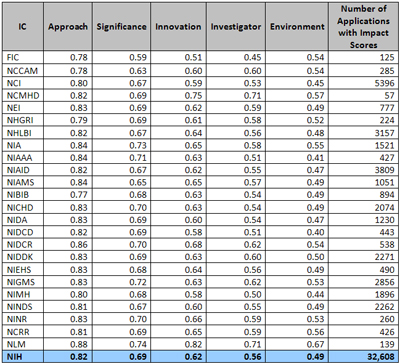

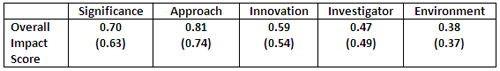

In a recent post, I presented correlations between the overall impact scores and the five individual criterion scores for sample sets of NIGMS applications. I also noted that the NIH Office of Extramural Research (OER) was performing similar analyses for applications across NIH.

OER’s Division of Information Services has now analyzed 32,608 applications (including research project grant, research center and SBIR/STTR applications) that were discussed and received overall impact scores during the October, January and May Council rounds in Fiscal Year 2010. Here are the results by institute and center:

This analysis reveals the same trends in correlation coefficients observed in smaller data sets of NIGMS R01 grant applications. Furthermore, no significant differences were observed in the correlation coefficients among the 24 NIH institutes and centers with funding authority.

Measuring the Scientific Output and Impact of NIGMS Grants

A frequent topic of discussion at our Advisory Council meetings—and across NIH—is how to measure scientific output in ways that effectively capture scientific impact. We have been working on such issues with staff of the Division of Information Services in the NIH Office of Extramural Research. As a result of their efforts, as well as those of several individual institutes, we now have tools that link publications to the grants that funded them.

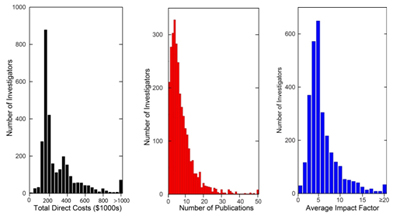

Using these tools, we have compiled three types of data on the pool of investigators who held at least one NIGMS grant in Fiscal Year 2006. We determined each investigator’s total NIH R01 or P01 funding for that year. We also calculated the total number of publications linked to these grants from 2007 to mid-2010 and the average impact factor for the journals in which these papers appeared. We used impact factors in place of citations because the time dependence of citations makes them significantly more complicated to use.

I presented some of the results of our analysis of this data at last week’s Advisory Council meeting. Here are the distributions for the three parameters for the 2,938 investigators in the sample set:

For this population, the median annual total direct cost was $220,000, the median number of grant-linked publications was six and the median journal average impact factor was 5.5.

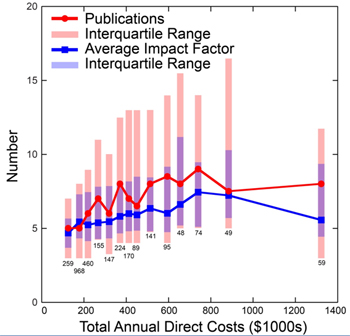

A plot of the median number of grant-linked publications and median journal average impact factors versus grant total annual direct costs is shown below.

This plot reveals several important points. The ranges in the number of publications and average impact factors within each total annual direct cost bin are quite large. This partly reflects variations in investigator productivity as measured by these parameters, but it also reflects variations in publication patterns among fields and other factors.

Nonetheless, clear trends are evident in the averages for the binned groups, with both parameters increasing with total annual direct costs until they peak at around $700,000. These observations provide support for our previously developed policy on the support of research in well-funded laboratories. This policy helps us use Institute resources as effectively as possible in supporting the overall biomedical research enterprise.
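The binning behind a plot of this kind can be sketched in a few lines of Python. This is only an illustration: the function name, the $100,000 bin width and the sample records are assumptions, not the actual NIGMS data or method.

```python
from collections import defaultdict
from statistics import median


def median_by_cost_bin(records, bin_width=100_000):
    """Group (annual_direct_cost, publication_count) pairs into cost bins
    and return the median publication count for each bin.

    The bin width is an assumed value chosen for illustration.
    """
    bins = defaultdict(list)
    for cost, publications in records:
        bin_start = (cost // bin_width) * bin_width  # lower edge of the bin
        bins[bin_start].append(publications)
    return {start: median(counts) for start, counts in sorted(bins.items())}


# Hypothetical investigators: (total annual direct cost in dollars, grant-linked publications)
sample_records = [
    (150_000, 3), (180_000, 5),
    (220_000, 6), (260_000, 8),
    (310_000, 7), (390_000, 11),
]

medians = median_by_cost_bin(sample_records)
for bin_start, value in medians.items():
    print(f"${bin_start:,} to ${bin_start + 99_999:,}: median {value}")
```

The same grouping could be repeated with journal average impact factors in place of publication counts.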

This is a preliminary analysis, and the results should be viewed with some skepticism given the metrics used, the challenges of capturing publications associated with particular grants, the lack of inclusion of funding from non-NIH sources and other considerations. Even with these caveats, the analysis does provide some insight into the NIGMS grant portfolio and indicates some of the questions that can be addressed with the new tools that NIH is developing.

Scoring Analysis with Funding and Investigator Status

My previous post generated interest in seeing the results coded to identify new investigators and early stage investigators. Recall that new investigators are defined as individuals who have not previously competed successfully as program director/principal investigator for a substantial NIH independent research award. Early stage investigators are defined as new investigators who are within 10 years of completing the terminal research degree or medical residency (or the equivalent).
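As a rough illustration, the two definitions above can be written as a small classification rule. The function and argument names are hypothetical, and treating "within 10 years" as "10 years or fewer" is an assumption.

```python
def investigator_status(has_prior_substantial_award, years_since_degree_or_residency):
    """Classify an applicant using the definitions given in the text.

    has_prior_substantial_award: True if the applicant has previously competed
        successfully as PD/PI for a substantial NIH independent research award.
    years_since_degree_or_residency: years since the terminal research degree
        or medical residency (or the equivalent).
    """
    if has_prior_substantial_award:
        return "established investigator"
    # Assumption: "within 10 years" is read as 10 years or fewer.
    if years_since_degree_or_residency <= 10:
        return "early stage investigator"
    return "new investigator (not early stage)"


print(investigator_status(False, 6))
print(investigator_status(False, 14))
print(investigator_status(True, 20))
```

Note that every early stage investigator is also a new investigator; the third label simply separates the new investigators who fall outside the 10-year window.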

Below is a plot for 655 NIGMS R01 applications reviewed during the January 2010 Council round.

This plot reveals that many of the awards made for applications with less favorable percentile scores go to early stage and new investigators. This is consistent with recent NIH policies.

The plot also partially reveals the distribution of applications from different classes of applicants. This distribution is more readily seen in the plot below.

This plot shows that competing renewal (Type 2) applications from established investigators represent the largest class in the pool and receive more favorable percentile scores than do applications from other classes of investigators. The plot also shows that applications from early stage investigators have a score distribution that is quite similar to that for established investigators submitting new applications. The curve for new investigators who are not early stage investigators is similar as well, although the new investigator curve is shifted somewhat toward less favorable percentile scores.

Scoring Analysis with Funding Status

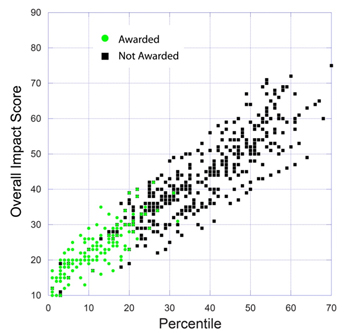

In response to a previous post, a reader requested a plot showing impact score versus percentile for applications for which funding decisions have been made. Below is a plot for 655 NIGMS R01 applications reviewed during the January 2010 Council round.

This plot confirms that the percentile representing the halfway point of the funding curve is slightly above the 20th percentile, as expected from previously posted data.

Notice that there is a small number of applications with percentile scores better than the 20th percentile for which awards have not been made. Most of these correspond to new (Type 1, not competing renewal) applications that are subject to the NIGMS Council’s funding decision guidelines for well-funded laboratories.

New NIH Principal Deputy Director

NIH Director Francis Collins recently named Larry Tabak as the NIH principal deputy director. Raynard Kington previously held this key position.

Over the years, I have worked closely with Dr. Tabak in many settings, including the Enhancing Peer Review initiative. A biochemist who continues to do research in the field of glycobiology, he is a firm supporter of investigator-initiated research and basic science. He is also a good listener and a creative problem solver.

Dr. Tabak, who has both D.D.S. and Ph.D. degrees, has directed NIH’s National Institute of Dental and Craniofacial Research for the past decade. In 2009, Dr. Kington—who had stepped in as acting director of NIH following the departure of Elias Zerhouni—tapped Dr. Tabak to be his acting deputy. Dr. Tabak’s achievements included playing an integral role in NIH Recovery Act activities.

Given the challenging issues that the principal deputy director often works on, Dr. Tabak’s experience—from dentist and bench scientist to scientific administrator—clearly provides him with valuable tools for the job. His experience as an endodontist may be particularly useful in some situations, allowing him to identify and “treat” potentially serious issues.

Scoring Analysis: 1-Year Comparison

I recently posted several analyses (on July 15, July 19 and July 21) of the relationships between the overall impact scores on R01 applications determined by study sections and the criterion scores assigned by individual reviewers. These analyses were based on a sample of NIGMS applications reviewed during the October 2009 Council round. This was the first batch of applications for which criterion scores were used.

NIGMS applications for the October 2010 Council round have now been reviewed. Here I present my initial analyses of this data set, which consists of 654 R01 applications that were discussed, scored and percentiled.

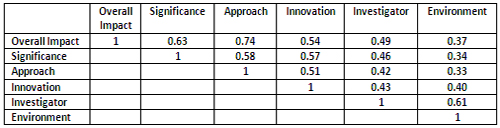

The first analysis, shown below, relates to the correlation coefficients between the overall impact score and the averaged individual criterion scores.

Overall, the trend in correlation coefficients is similar to that observed for the sample from 1 year ago, although the correlation coefficients for the current sample are slightly higher for four out of the five criterion scores.
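For readers who want to try this kind of calculation on their own data, here is a minimal sketch of a Pearson correlation between an averaged criterion score and the overall impact score. The scores are made up for illustration, and the assumption of three reviewers per application is mine.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sum((x - mean_x) ** 2 for x in xs)
    spread_y = sum((y - mean_y) ** 2 for y in ys)
    return covariance / sqrt(spread_x * spread_y)


# Hypothetical data: approach scores (1 to 9) from three reviewers per application
approach_by_reviewer = [[1, 2, 2], [2, 3, 3], [3, 3, 4], [4, 5, 4], [5, 6, 6]]
# Hypothetical overall impact scores (10 to 90) for the same applications
overall_impact = [15, 22, 35, 41, 60]

# Average the individual reviewers' criterion scores for each application
avg_approach = [sum(scores) / len(scores) for scores in approach_by_reviewer]

r = pearson_r(avg_approach, overall_impact)
print(f"correlation between averaged approach score and overall impact: {r:.2f}")
```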

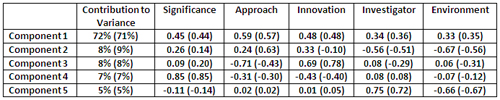

Here are results from a principal component analysis:

There is remarkable agreement between the results of the principal component analysis for the October 2010 data set and those for the October 2009 data set. The first principal component accounts for 72% of the variance, with the largest contribution coming from approach, followed by innovation, significance, investigator and finally environment. This agreement between the data sets extends through all five principal components, although there is somewhat more variation for principal components 2 and 3 than for the others.

Another important factor in making funding decisions is the percentile assigned to a given application. The percentile is a ranking that shows the relative position of each application’s score among all scores assigned by a study section at its last three meetings. Percentiles provide a way to compare applications reviewed by different study sections that may have different scoring behaviors. They also correct for “grade inflation” or “score creep” in the event that study sections assign better scores over time.
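The idea can be illustrated with a simplified sketch. It assumes that lower scores are better and uses a plain rank-based formula, which may differ from the exact formula NIH applies; the score pools are invented.

```python
def percentile_rank(score, pool):
    """Percent of scores in the pool that are as good as or better than `score`.

    Assumes lower scores are more favorable. This is a simplified formula
    for illustration, not necessarily the one NIH uses.
    """
    as_good_or_better = sum(1 for other in pool if other <= score)
    return 100.0 * as_good_or_better / len(pool)


# Hypothetical pools of scores from two study sections with different scoring habits
lenient_section = [10, 12, 15, 18, 20, 22, 25, 30, 35, 40]
strict_section = [20, 25, 30, 35, 40, 45, 50, 55, 60, 70]

print(percentile_rank(20, lenient_section))  # 50.0
print(percentile_rank(20, strict_section))   # 10.0
```

In this example the same overall impact score of 20 lands at the 50th percentile in one section and at the 10th in the other, which is the kind of difference in scoring behavior that percentiling is meant to correct.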

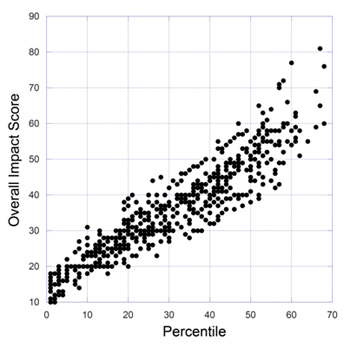

Here is a plot of percentiles and overall impact scores:

This plot reveals that a substantial range of overall impact scores can be assigned to a given percentile score. This phenomenon is not new; a comparable level of variation among study sections was seen in the previous scoring system as well.

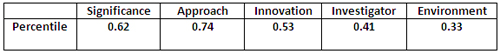

The correlation coefficient between the percentile and overall impact score in this data set is 0.93. The correlation coefficients between the percentile and the averaged individual criterion scores are given below:

As one would anticipate, these correlation coefficients are somewhat lower than those for the overall impact score since the percentile takes other factors into account.

The results of a principal component analysis applied to the percentile data show:

The results of this analysis are very similar to those for the overall impact scores, with the first principal component accounting for 72% of the variance and similar weights for the individual averaged criterion scores.

Our posting of these scoring analyses has led the NIH Office of Extramural Research and individual institutes to launch their own analyses. I will share their results as they become available.

Even More on Criterion Scores: Full Regression and Principal Component Analyses

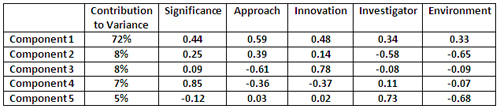

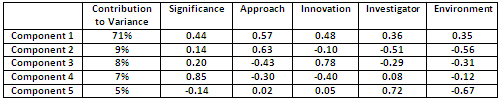

After reading yesterday’s post, a Feedback Loop reader asked for a full regression analysis of the overall impact score based on all five criterion scores. With the caveat that one should be cautious in over-interpreting such analyses, here it is:

As one might expect, the various parameters are substantially correlated.

A principal component analysis reveals that a single principal component accounts for 71% of the variance in the overall impact scores. This principal component includes substantial contributions from all five criterion scores, with weights of 0.57 for approach, 0.48 for innovation, 0.44 for significance, 0.36 for investigator and 0.35 for environment.
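One quick consistency check on these weights: the weights of a principal component are usually reported as a unit-length vector, so their squares should sum to about 1. The short sketch below runs that check on the five values quoted above; the variable names are my own.

```python
# Weights of the first principal component, as quoted in the text
weights = {
    "approach": 0.57,
    "innovation": 0.48,
    "significance": 0.44,
    "investigator": 0.36,
    "environment": 0.35,
}

# Sum of squared weights; close to 1 for a unit-length component
norm_squared = sum(w ** 2 for w in weights.values())
print(round(norm_squared, 2))  # 1.0
```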

Here are more results of the full principal component analysis:

The second component accounts for an additional 9% of the variance and has a substantial contribution from approach, with significant contributions of the opposite sign for investigator and environment. The third component accounts for an additional 8% of the variance and appears to be primarily related to innovation. The fourth component accounts for an additional 7% of the variance and is primarily related to significance. The final component accounts for the remaining 5% of the variance and has contributions from investigator and environment of the opposite sign.
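For readers unfamiliar with the technique, the sketch below shows one way to obtain a first principal component and its share of the variance using only the Python standard library. It uses power iteration on the covariance matrix, which is a choice made for brevity and not necessarily how the analysis above was done. The score table is invented.

```python
from math import sqrt


def first_principal_component(rows, iterations=500):
    """Return (weights, variance_share) for the first principal component.

    rows: one list of criterion scores per application.
    Uses power iteration starting from a vector of ones, which works when
    that vector is not orthogonal to the leading component (true for the
    positively correlated scores in this example).
    """
    n, k = len(rows), len(rows[0])
    means = [sum(row[j] for row in rows) / n for j in range(k)]
    centered = [[row[j] - means[j] for j in range(k)] for row in rows]
    # Sample covariance matrix of the criterion scores
    cov = [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(k)]
           for i in range(k)]

    vec = [1.0] * k
    for _ in range(iterations):
        nxt = [sum(cov[i][j] * vec[j] for j in range(k)) for i in range(k)]
        norm = sqrt(sum(v * v for v in nxt))
        vec = [v / norm for v in nxt]

    # Variance along the component, divided by the total variance
    eigenvalue = sum(vec[i] * sum(cov[i][j] * vec[j] for j in range(k))
                     for i in range(k))
    total_variance = sum(cov[i][i] for i in range(k))
    return vec, eigenvalue / total_variance


# Hypothetical averaged criterion scores per application, in the order
# approach, significance, innovation, investigator, environment
criterion_scores = [
    [1, 2, 1, 1, 1],
    [2, 2, 3, 1, 2],
    [3, 3, 2, 2, 1],
    [4, 3, 4, 2, 2],
    [5, 5, 4, 3, 3],
    [6, 5, 6, 3, 2],
]

weights, share = first_principal_component(criterion_scores)
print("weights:", [round(w, 2) for w in weights])
print(f"share of variance: {share:.0%}")
```

A full analysis would extract all five components, typically with a numerical library's eigenvalue routine instead of power iteration.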

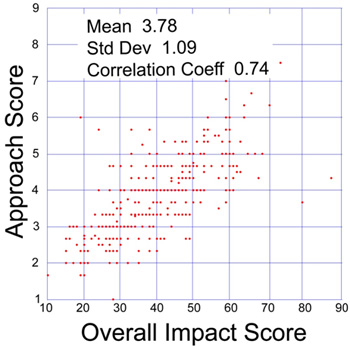

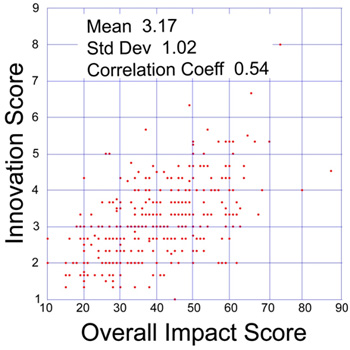

More on Criterion Scores

In an earlier post, I presented an analysis of the relationship between the average significance criterion scores provided independently by individual reviewers and the overall impact scores determined at the end of the study section discussion for a sample of 360 NIGMS R01 grant applications reviewed during the October 2009 Council round. Based on the interest in this analysis reflected here and on other blogs, including DrugMonkey and Medical Writing, Editing & Grantsmanship, I want to provide some additional aspects of this analysis.

As I noted in the recent post, the criterion score most strongly correlated (0.74) with the overall impact score is approach. Here is a plot showing this correlation:

Similarly, here is a plot comparing the average innovation criterion score and the overall impact score:

Note that the overall impact score is NOT derived by combining the individual criterion scores. This policy is based on several considerations, including:

- The effect of the individual criterion scores on the overall impact score is expected to depend on the nature of the project. For example, an application directed toward developing a community resource may not be highly innovative; indeed, a high level of innovation may be undesirable in this context. Nonetheless, such a project may receive a high overall impact score if the approach and significance are strong.

- The overall impact score is refined over the course of a study section discussion, whereas the individual criterion scores are not.

That being said, it is still possible to derive the average behavior of the study sections involved in reviewing these applications from their scores. When the weighting factors in a linear combination of the individual criterion scores are optimized, the correlation coefficient between that combination and the overall impact score is 0.78. The optimized weighting factors are approximately related to the correlation coefficients between the individual criterion scores and the overall impact score.
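One common way to optimize such weighting factors is ordinary least squares, and the sketch below takes that route. The source does not say which optimization was used, so this is an assumption, as are the three-criterion data set and all of the names in the code.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return cov / sqrt(sum((x - mx) ** 2 for x in xs) * sum((y - my) ** 2 for y in ys))


def solve(a, b):
    """Solve the linear system a x = b by Gauss-Jordan elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit_weights(rows, targets):
    """Least-squares fit; returns [intercept, weight_1, weight_2, ...]."""
    design = [[1.0] + list(row) for row in rows]
    k = len(design[0])
    xtx = [[sum(d[i] * d[j] for d in design) for j in range(k)] for i in range(k)]
    xty = [sum(d[i] * t for d, t in zip(design, targets)) for i in range(k)]
    return solve(xtx, xty)


# Hypothetical averaged criterion scores: approach, significance, innovation
criteria = [
    [1.3, 2.0, 2.3], [2.0, 1.7, 3.0], [2.7, 3.0, 2.0], [3.3, 2.3, 4.0],
    [4.0, 4.3, 3.3], [4.7, 3.7, 5.0], [5.3, 5.0, 4.3], [6.0, 5.7, 6.3],
]
# Hypothetical overall impact scores for the same applications
impact = [14, 20, 27, 33, 42, 47, 55, 63]

coeffs = fit_weights(criteria, impact)
combined = [coeffs[0] + sum(w * x for w, x in zip(coeffs[1:], row)) for row in criteria]

combined_r = pearson_r(combined, impact)
single_rs = [pearson_r([row[j] for row in criteria], impact) for j in range(3)]
print(f"combined: {combined_r:.3f}, individual: {[round(r, 3) for r in single_rs]}")
```

With a least-squares fit that includes an intercept, the combined correlation is never lower than the strongest single-criterion correlation, which matches the pattern reported above (0.78 combined versus 0.74 for approach alone).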

The availability of individual criterion scores provides useful data for analyzing study section behavior. In addition, these criterion scores are important parameters that can assist program staff in making funding recommendations.