UPDATE: The MIRA FOA for early stage investigators has been reissued.

We have begun making grant awards resulting from responses to RFA-GM-16-003 (R35), the Maximizing Investigators’ Research Award (MIRA) for New and Early Stage Investigators pilot program. We received 320 applications in areas related to NIGMS’ mission, and they were reviewed by four special emphasis panels organized by the NIH Center for Scientific Review. We anticipate making 93 awards, which is more than we estimated in the funding opportunity announcement (FOA); the corresponding success rate is 29.1%.
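The 29.1% figure follows from dividing the anticipated awards by the applications received. The minimal sketch below assumes success rate is the plain ratio of awards to applications, which reproduces the number quoted above. The helper name `success_rate` is hypothetical, and NIH's formal success-rate methodology may treat resubmissions differently.

```python
def success_rate(awards: int, applications: int) -> float:
    """Return awards / applications as a percentage (simple ratio, an assumption)."""
    if applications <= 0:
        raise ValueError("applications must be positive")
    return awards / applications * 100

# Figures from the post: 93 anticipated awards out of 320 applications.
rate = success_rate(93, 320)
print(f"{rate:.1f}%")  # 29.1%
```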

The awards will be for a 5-year project period, as is typically the case for NIGMS R01 awards to new and early stage investigators. Most awards will be for the requested and maximum amount of $250,000 in annual direct costs, with an average of $239,000 and median of $250,000. In Fiscal Year 2015, NIGMS R01 awards to new or early stage investigators averaged $209,000 in annual direct costs (median of $198,000) and had a 24.4% success rate. During the same period, all competing NIGMS R01 awards averaged $236,000 in annual direct costs (median of $210,000) and had a 28.8% success rate. Thus, the MIRA pilot program had success rates similar to those of comparable R01 applications and offered some direct financial benefit to this group of applicants. We expect that other benefits of the MIRA program, including increased funding stability and research flexibility, reductions in time spent writing and reviewing grant applications, and improved distribution of NIGMS funding, will accrue among these investigators and the community at large as implementation of the MIRA program continues.
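To put the "direct financial benefit" in numbers, the short sketch below compares the MIRA figures with the FY 2015 NIGMS R01 figures for new and early stage investigators quoted above. It uses only the averages and medians stated in this post; the helper `pct_change` is a hypothetical name used for illustration.

```python
# Annual direct costs (USD) as quoted in the post.
mira_avg, mira_median = 239_000, 250_000        # MIRA pilot awards
esi_r01_avg, esi_r01_median = 209_000, 198_000  # FY 2015 NIGMS R01s, new/early stage investigators

def pct_change(new: float, old: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100

avg_gap = mira_avg - esi_r01_avg            # dollar difference in averages
median_gap = mira_median - esi_r01_median   # dollar difference in medians

print(avg_gap, round(pct_change(mira_avg, esi_r01_avg), 1))           # 30000 14.4
print(median_gap, round(pct_change(mira_median, esi_r01_median), 1))  # 52000 26.3
```

On these figures, a MIRA averages about $30,000 (roughly 14%) more per year in direct costs than a comparable R01, and the median is $52,000 higher.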

You can find more information about the awards on NIH RePORTER by entering RFA-GM-16-003 in the FOA field; however, the record of funded grants will not be complete until after the end of Fiscal Year 2016 (September 30). Because the initial budget period of MIRA awards will be offset by existing NIGMS grant support from other mechanisms (e.g., career awards), the first-year budget of a MIRA may be lower than the annual funding level used to calculate the average and median amounts shown above. We plan to post a detailed analysis of MIRAs after we have issued all the awards. We’ve previously posted information on NIGMS R01 award sizes and success rates for new and early stage investigators.

As I mentioned in my last post, we’re planning to reissue the MIRA FOA for early stage investigators in the near future.

You can find additional information about the program on our MIRA web page.

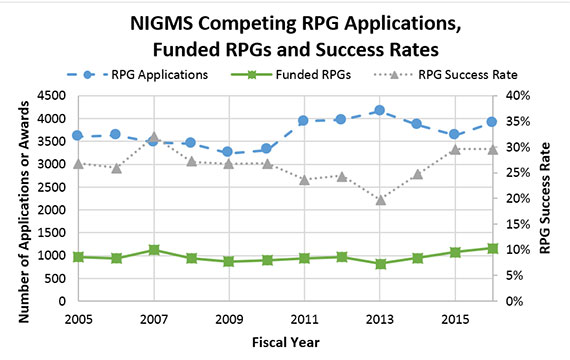

, was signed into law. The appropriation provides NIGMS with a budget of $2,937,218,000 in Fiscal Year (FY) 2020, a 2.2% increase over the FY 2019 appropriation. With this increased budget, NIGMS is committed to providing taxpayers with the best possible returns on their investments in fundamental biomedical research [PDF]. As part of this commitment to stewardship [PDF], we regularly monitor trends in our funding portfolio.